Overview of VoxPoser

2023-07-12

LLMs’ Code-Writing Capabilities Enable Them to Control Robotic Actions

The title of this paper is “VoxPoser: Composable 3D Value Maps for Robotic Manipulation with Language Models,” authored by the research team of Fei-Fei Li from Stanford University and the University of Illinois Urbana-Champaign.

This paper is a practical exploration in the field of Embodied AI. The goal of Embodied AI is to create agents like robots that can learn to creatively solve challenging tasks that require interaction with the environment. These agents can complete a variety of tasks in the real world through seeing, talking, listening, acting, and reasoning.

[2]

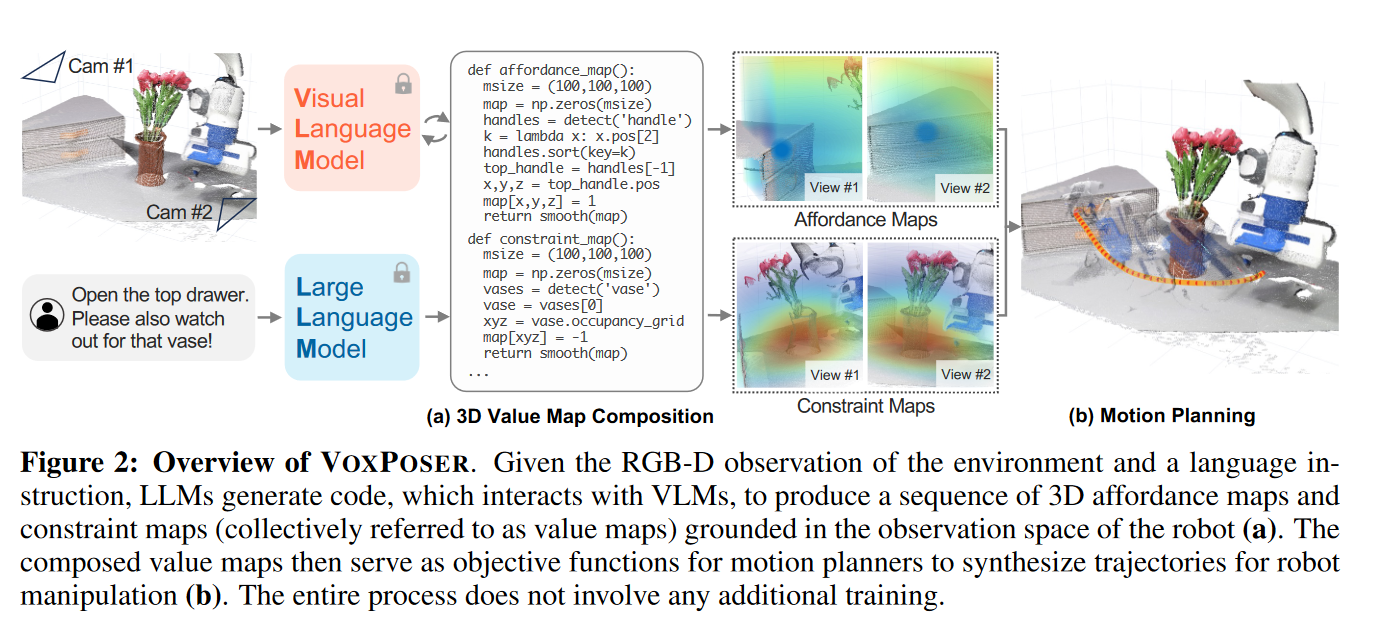

VoxPoser is a system that connects multiple endpoints. It leverages the natural language interaction capabilities of Large Language Models (LLMs, such as GPT) to convert human natural language into computer-understandable conditional action primitives and constraints. These transformed semantic information can generate compliant Python code, which can guide Visual Language Models (VLMs) to construct 3D Value Maps (3D Value Map = Affordance Map + Constraint Map) under sensor-acquired information (RGB-D environmental observations), mapping external knowledge into the robot’s observation space. Using this map, the robot can complete corresponding actions. VoxPoser bridges the gap between robotic operations and natural language instructions, enabling robots to perform a variety of daily operational tasks.

In previous research on robotic operations, most still rely on predefined action primitives to physically interact with the environment. This dependency is the main bottleneck of current systems, as it requires a large amount of robot data. VoxPoser offers a solution by utilizing the rich internal knowledge of LLMs to generate robotic operation trajectories without the need for extensive data collection or manual design for each individual primitive.

The main contribution of VoxPoser lies in its innovative framework, capable of zero-shot generating closed-loop robotic trajectories that respond to dynamic disturbances. Without any additional assistance (data, examples, limited action sets), the system input is any natural language (e.g., “Pick up the white rice grains on the ground and clean them before putting them in a clean bag in the kitchen.”), and the output is the corresponding machine action. The machine can adapt to various different environments and changing environments, demonstrating a certain level of robustness. Additionally, although the model is static, the framework can also achieve efficient learning (e.g., learning to open a door with a lever in 3 minutes).

Example:

For instance, given the instruction “Open the top drawer, be careful with the vase,” the LLM can infer: 1) The handle at the top of the drawer should be grabbed; 2) The handle needs to be pulled outward; 3) The robot should stay away from the vase.

When this information is expressed in text form, the LLM can generate Python code, call perception APIs to obtain spatial geometric information of relevant objects or parts (like “handle”), and then process the 3D voxels, specifying rewards or costs at relevant positions in the observation space (e.g., high value assigned to the target position of the handle, low value around the vase).

Finally, the composed value map can serve as the objective function for the motion planner, directly synthesizing the robot trajectory to fulfill the given instruction, without requiring additional training data for each task or LLM. Figure 1 is a schematic diagram and a subset of the tasks we consider.

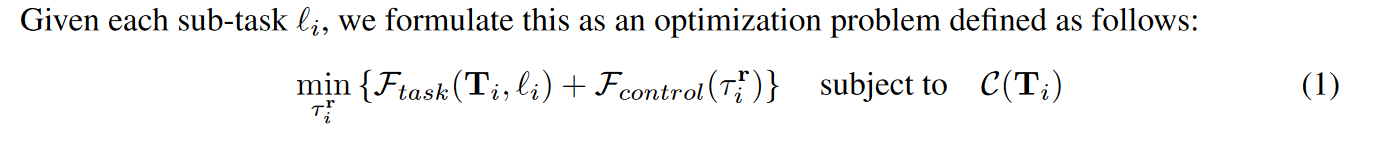

Where $ \mathscr l_i $ is the $ i^{th} $ $ \mathscr L $, i.e., the sub-task statement segmented by (LLMs or search-based planner). $ \mathbf T_i $ is the environmental state at time $ i $. $ \tau^\mathbf r_i \subseteq \mathbf T_i $ is a series of robotic action trajectories, $ C(\mathbf T_i) $ represents the relevant constraints (dynamics and kinematics constraints). $ F_{task} $ and $ F_{control} $ represent the quality score of task $ \mathscr l_i $ being completed by $ \mathbf T_i $, and the manipulation cost (control cost, including action and time costs), respectively.

Example:

$ \mathscr L $ = Open the top drawer

$ \mathscr l_1 $ = Grab the handle of the top drawer

$ \mathscr l_2 $ = Pull open this drawer

Reasons for Success:

- Like GPT-4’s code Interpreter, VoxPoser aims to leverage the code-writing capabilities of LLMs, thereby easily obtaining reasonable guarantees—erroneous code cannot be executed.

However, we find that LLMs excel at inferring language-conditioned affordances and constraints, and by leveraging their code-writing capabilities, they can compose dense 3D voxel maps that ground them in the visual space by orchestrating perception calls and array operations.

In the very near future, such systems can be applied to more powerful, more personalized machine agents. For example, integrating dishwashers and other furniture into robots to create an all-capable domestic robot.

(Generated by GPT-4)

References:

- [1] Quantum Bits

- [2] https://embodied-ai.org/